I was reading Ethan’s The Vision Thing blog this morning, and followed a link to two previous posts on folklore processes and identity processes. Brilliant!

Month: November 2005

Service-Oriented Business Architecture

I’ve been doing quite a bit of enterprise architecture work lately for a couple of clients, which has me thinking about how to “package” business processes as “services” for reusability: a service-oriented business architecture (SOBA), if you will. (I have no idea if anyone else has used that term before, but it fits in describing the componentization and reuse of various functions and processes within an organization, regardless of whether or not the processes are assisted by information systems.)

When we think about SOA, we think about automated processes and services: web services that can be called, or orchestrated, to execute a specific function such as mapping a set of input data to output data. SOBA, however, is for all those unautomated or semi-automated processes (what someone in a client IT department once referred to as “human-interrupted” processes) that may be reused, such as a credit adjudication process that requires human intervention. In many large organizations, the same (or very similar) processes are done by different groups of people in different departments, and if they’re not modeling some of this via enterprise architecture, then they likely have no idea that the redundancy even exists. There are exceptions to this, usually in very paper-intensive processes; most organizations, for example, have some sort of centralized mail room and some sort of centralized filing, although there will be pockets of redundancy even in such a structure.

From a Zachman framework standpoint, most web services are modeled at row 4 (technology model) of column 2 (function), whereas business “services” are modeled at row 2 (business model) of column 2. If you’ve spent some time with Zachman, you know that the lower (higher-numbered) rows are not just more descriptive versions of the upper rows; the rows describe fundamentally different perspectives on the enterprise, and often contain models that are unique to that particular row.

In talking about enterprise architecture, I often refer to business function reusability as a key benefit, but most people think purely about IT functions when they think about reusability, and overlook the benefits that could arise from reusing business processes. What’s required to get people thinking about reusing business processes, then? One thing for certain is a common process modeling language, as I discussed here, but there’s more to it than that. There needs to be some recognition of business functions and processes as enterprise assets, not just departmental assets. For quite a while now, information systems and even data have been recognized as belonging to the enterprise rather than a specific department, even if they primarily serve one department, but the same is not true of the human-facing processes around them: most departments think of their business processes as belonging to them, and have no concept of either sharing them with other departments or looking for ways to reduce the redundancy of similar business functions around the enterprise.

These ideas kicked into gear back in the summer when I read Tom Davenport’s HBR article on the commoditization of processes, and have gained strength in the past few weeks as I’ve been contemplating enterprise architecture. His article focused mainly on how processes could be outsourced once they’re standardized, but I have a slightly different take on it: if processes within an organization are modeled and standardized, there’s a huge opportunity to identify the redundant business processes across an organization within the context of an enterprise architecture, consolidate the functionality into a single business “service”, then enable that service for identification and reuse where appropriate. Sure, some of these business functions may end up being outsourced, but many more may end up being turned into highly-efficient business services within the organization.
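As a rough illustration of the “identify the redundant business processes” step, here is a minimal Python sketch that compares modeled processes from different departments by the steps they share. Everything in it is an assumption made for the example: the departments, process names and step names are invented, and the Jaccard overlap measure and the 0.6 threshold are just one simple way to flag candidates, not something taken from Davenport’s article or from any EA tool.

```python
from itertools import combinations

# Hypothetical process inventory: (department, process name) -> set of step names.
PROCESSES = {
    ("Retail Lending", "credit adjudication"): {
        "receive application", "verify identity", "pull credit report",
        "adjudicate", "notify applicant",
    },
    ("Commercial Lending", "credit review"): {
        "receive application", "verify identity", "pull credit report",
        "adjudicate", "record decision",
    },
    ("Claims", "claim intake"): {
        "receive claim", "verify identity", "assess damage", "notify claimant",
    },
}


def jaccard(a, b):
    """Share of steps the two processes have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def redundancy_candidates(processes, threshold=0.6):
    """Return pairs of processes from different departments whose steps overlap heavily."""
    candidates = []
    for (key_a, steps_a), (key_b, steps_b) in combinations(processes.items(), 2):
        if key_a[0] == key_b[0]:
            continue  # same department: not cross-department redundancy
        score = jaccard(steps_a, steps_b)
        if score >= threshold:
            candidates.append((key_a, key_b, round(score, 2)))
    # Highest overlap first, so the strongest consolidation candidates lead the list.
    return sorted(candidates, key=lambda c: c[2], reverse=True)


for dept_a, dept_b, score in redundancy_candidates(PROCESSES):
    print(dept_a, "<->", dept_b, "overlap:", score)
```

A comparison like this only works if the processes were modeled with a common vocabulary in the first place, which is why the common modeling language matters so much; a flagged pair is a candidate for a closer look, not proof that the two processes should be merged into one business “service”.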

There’s common ground with some older (and slightly tarnished) techniques such as reengineering, but I believe that creating business services through enterprise architecture is ultimately a much more powerful concept.

More on the Proforma webinar

I found an answer to EA wanna be!’s comment on my post about the Proforma EA webinar last week: David Ritter responded that the webinar was not recorded, but he’ll be presenting the same webinar again on December 9th at 2pm Eastern. You can sign up for it here. He also said that he’s reworking the material and will be doing a version in January that will be recorded, so if you miss it on the 9th you can still catch it then or (presumably) watch the recorded version on their site.

There are a couple of other interesting-looking webinars that they’re offering; I’ve signed up for “Accelerated Process Improvement” on December 8th.

Ghosts of articles past

This was a shocker: I clicked on a link to test some search engines, typed in my own name as the search phrase, and one of the engines returned a link to articles that I wrote (or was interviewed for) back in 1991-1994. All of these were for Computing Canada, a free IT rag similar to today’s eWeek but aimed at the Canadian marketplace.

Back in 1992, I wrote about the trend of EIM (electronic image management, or what is now ECM/BPM) vendors moving from proprietary hardware and software components to open systems: Windows workstations, UNIX servers, and Oracle RDBMS, for example. This was right around the time that both FileNet and Wang were migrating off proprietary hardware, but both were still using customized or fully proprietary versions of the underlying O/S and DBMS. My predictions at that time (keep in mind that “open systems” was largely synonymous with “UNIX” in that dark period):

Future EIM systems will continue toward open platforms and other emerging industry standards. As the market evolves, expect the following trends.

- More EIM vendors will offer systems consisting of a standard Unix server with MS Windows workstations. Some will port their software to third-party hardware and abandon the hardware market altogether.

- EIM systems will increasingly turn to commercial products for such underlying components as databases and device drivers. Advances in these components can thus be more quickly incorporated into a system.

- A greater emphasis will be placed on professional software integration services to customize EIM systems.

On an open platform, EIM systems will become part of a wider office technology solution, growing into an integral and seamless component of the corporate computing environment.

Okay, not so bad; I can confidently say that all that really happened in the intervening years. The prediction that some of the EIM vendors would abandon the specialized hardware market altogether still makes me giggle, since I can’t imagine Fuego or Lombardi, for example, building their own servers.

In another article that same year, I wrote about a client of mine where we had replaced the exchange of paper with the exchange of data: an early e-business application. I summarized with:

Whenever possible, exchange electronic data rather than paper with other departments or organizations. In our example, Canadian and U.S. offices will exchange database records electronically, eliminating several steps in document processing.

This was just not done on a small scale at that time: the only e-business applications were full-on, expensive EDI applications, and we had to create the whole underlying structure to support a small e-business application without using EDI. What I wouldn’t have given for BizTalk back then.

By early 1994, I’m not talking so much about documents any more, but about process. First, I propose this radical notion:

As graphical tools become more sophisticated, development of production workflow maps can be performed by business analysts who understand the business process, rather than by IT personnel.

I finish up with some advice for evaluating Workflow Management Systems (WFMS, that is, early BPM systems):

WFMS’ vary in their routing and processing capabilities, so carefully determine the complexity of the routing rules and processing methods you require. Typical minimum requirements include rules-based conditional branching, parallel routing and rendezvous, role assignments, work-in-process monitoring and re-assignment, deadline alerts, security and some level of integration with other applications.

I find this last paragraph particularly interesting, because I’m still telling this same story to clients today. The consolidation of the BPM marketplace to include all manner of systems from EAI to BPM and everything in between has led to a number of products that can’t meet one or more of these “minimum requirements”, even though they’re all called BPM.
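To make a few of those “minimum requirements” concrete, here is a minimal Python sketch of rules-based conditional branching plus parallel routing with a rendezvous. It is an illustration only: the `WorkItem` class, the branch names, the role names and the 50,000 threshold are all hypothetical, and no real WFMS or BPMS exposes this API.

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    amount: float
    completed: set = field(default_factory=set)
    history: list = field(default_factory=list)


# Hypothetical parallel branches that must all finish before the rendezvous.
PARALLEL_BRANCHES = {"credit check", "document check"}


def route(item):
    """Rules-based conditional branching: choose a role from the work item's data."""
    role = "senior adjudicator" if item.amount > 50_000 else "adjudicator"
    item.history.append(f"routed to {role}")
    return role


def complete_branch(item, branch):
    """Record one finished parallel branch; return True once every branch is done."""
    if branch not in PARALLEL_BRANCHES:
        raise ValueError(f"unknown branch: {branch}")
    item.completed.add(branch)
    ready = item.completed == PARALLEL_BRANCHES
    if ready:
        item.history.append("rendezvous reached")
    return ready


item = WorkItem(amount=75_000)
complete_branch(item, "credit check")           # still waiting on the other branch
if complete_branch(item, "document check"):     # rendezvous: both branches are done
    print(route(item))
```

A product that can’t express even this much (wait for all parallel branches, then branch on a business rule) is the kind that fails the checklist above, whatever it’s called.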

I’m not sure whether being recognized as an expert in this field 14 years ago makes me feel proud to be one of the senior players, or makes me feel incredibly old!

Through a fog of BPM standards

If you’re still confused about BPM standards, this article by Bruce Silver at BPMInstitute.org may not help much, but it’s a start at understanding both modelling and execution languages including BPMN, UML, XPDL, BPEL and how they all fit together (or don’t fit together, in most cases). I’m not sure of the age of the article since it predates the OMG-BPMI merger that happened a few months ago, but I just saw it referenced on David Ogren’s BPM Blog and it caught my attention. David’s post is worth reading as a summary but may be influenced by his employer’s (Fuego’s) product, especially his negative comments on BPEL.

A second standards-related article of interest appeared on BPTrends last week authored by Paul Harmon. Harmon’s premise is that organizations can’t be process-oriented until managers visualize their business processes as process diagrams — something like not being able to be truly fluent in a spoken language until you think in that language — and that a common process modelling notation (like BPMN) must be widely known in order to foster communication via that notation.

That idea has a lot of merit; he uses the example of a common financial language (such as “balance sheet”), but it made me think about project management notation. I’m the last person in the world to be managing a project (I like to do the creative design and architecture stuff, not the managing of project schedules), but I learned critical path methods and notation — including hand calculations of such — back in university, and those same terms and techniques are now manifested in popular products such as MS-Project. Without these common terms (such as “critical path”) and the visual notation made popular by MS-Project, project management would be in a much bigger mess than it is today.

The related effect in the world of BPM is that the sooner we all start speaking the same language (BPMN), the sooner we start being able to model our processes in a consistent fashion that’s understood by all, and therefore the sooner that we all start thinking in BPMN instead of some ad hoc graphical notation (or even worse, a purely text description of our processes). There are a number of modelling tools, as well as the designer modules within various BPMS, that allow you to model in BPMN these days; there are even templates that you can find online for Visio to allow you to model in BPMN in that environment if you’re not ready for a full repository-based modelling environment. No more excuses.

Proforma Enterprise Architecture webinar

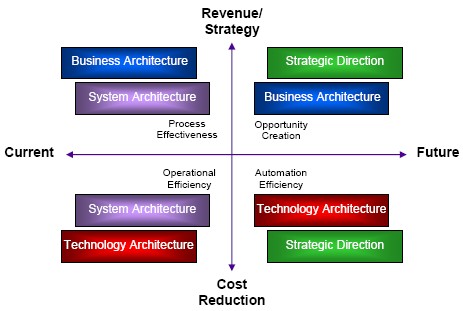

I’ve just finished viewing a webinar put on by Proforma that talks about building, using and managing an enterprise architecture, featuring David Ritter, Proforma’s VP of Enterprise Solutions. He came out of the EA group at United Airlines so really knows how this stuff works, which is a nice change from the usual vendor webinars where they need to bring in an outside expert to lend some real-world credibility to their message. He spent a full 20 minutes up front giving an excellent background of EA before moving on to their ProVision product, then walked through a number of their different models that are used for modelling strategic direction, business architecture, system (application and data) architecture and technology architecture. More importantly, he showed how the EA artifacts (objects or models) are linked together, and how they interact: how a workflow model links to a data model and a network model, for example. He also went through an EA benefits model based on some work by Mary Knox at Gartner, showing where the different types of architecture fit on the benefits map:

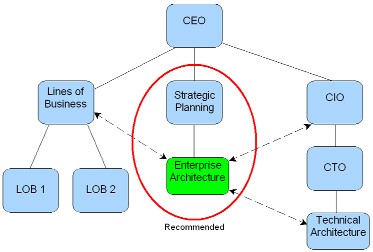

After the initial 30 minutes of “what is EA” and “what is ProVision”, he dug into a more interesting topic: how to use and manage EA within your organization. I loved one diagram that he showed about where EA governance belongs:

This reinforces what I’ve been telling people about how EA isn’t the same as IT architecture, and it can’t be “owned” by IT. He also showed the results of a survey by the Institute for Enterprise Architecture Developments, which indicates that the two biggest reasons why organizations are implementing EA are business-IT alignment (21%) and business change (17%): business reasons, not IT, are driving EA today. Even Gartner Group, following their ingestion of META Group and their robust EA practice earlier this year, has a Golden Rule of the New Enterprise Architecture that reflects this, “always make technology decisions based on business principles”, and goes on to state that by 2010, companies that have not aligned their technology with their business strategy will no longer be competitive.

Some of this information is available on the Proforma website as white papers (such as the benefits map), and some is from analyst reports. With any luck, the webinar will be available for replay soon.

Outstanding in Winnipeg

I understand that PR people have to write something in press releases, but this one today really made me laugh: ebizQ reports that HandySoft just installed their BizFlow BPM software at Cambrian Credit Union, “the largest credit union in Winnipeg”. You probably have to be Canadian for this to elicit spontaneous laughter; the rest of you can take note that Winnipeg is a city in the Canadian prairies with a population of about 650,000, known more for rail transportation and wheat than finance, and currently enjoying -10C and a fresh 30cm of snow that’s disrupting air travel. In fact, I spoke with someone in Winnipeg just this afternoon and he laughed at my previous post about my -20C boots, which he judged as woefully inadequate for any real walking about in The ‘Peg. Every one of my business-related trips to Winnipeg has been in the winter, when -50C is not unheard of, and although most of my clients there have been financial or insurance companies (and large ones), it’s not the first place that I think of when I think of financial centres where I would brag about installing the largest of anything.

Now this whole scenario isn’t as rip-roaringly funny as, for example, installing a system at the largest credit union in Saskatoon, but I have to admit that the hyperbole used in the press release completely distracted me from the point at hand, and has probably done a disservice to HandySoft. HandySoft may have done a fine job at Cambrian. They may have even written a great press release. But I didn’t get past the first paragraph where the big selling point was that the customer is the largest credit union in Winnipeg.

I sure hope that they’re not expecting any prospective customers to go on site visits there this time of year.

Update: an ebizQ editor emailed me within hours to say that they removed the superlative from the press release on their site. You can still find the original on HandySoft’s site here.

Content poetry

I look at James Kobielus’ blog once in a while to browse his insightful commentary on various technical subjects. I never expected poetry about content.

BPM en français

Although schooled in Canada where we all have to learn some degree of French, my French is dodgy at best (although, in my opinion, it tends to improve when I’ve been drinking). However, I noticed that my blog appeared on the blogroll of a French BPM blog that just started up, and I’ve been struggling through the language barrier to check it out. There’s no information on the author, but I was instantly endeared to him (?) when I read the following in his reasons for starting the blog:

le marketing bullshit est omniprésent

Isn’t that just too true in any language?

More on vendor blogs

I usually don’t put too much stock in BPM vendor blogs. First of all, there aren’t a lot of them (or at least, not a lot that I’ve seen), since I imagine that getting official sanction for writing a blog about your product or company is exponentially more difficult as your company gets larger. Secondly, they can disappear rather suddenly in this era of mergers and acquisitions. Thirdly, anybody who works for a vendor and has something interesting to say is probably too busy doing other things, like building the product, to spend much time blogging. And lastly, they’re always a bit self-promotional, even when they’re not a blatant PR/marketing soapbox. (Yes, I know, my blog is self-promotional, but I am my own PR and marketing department, so I’m required to do that, or I’d have to fire myself.)

I’ve been keeping an eye on Phil Gilbert’s blog — he’s the CTO at Lombardi. I don’t know him personally, although I’ve been seeing and hearing a lot about their product lately. He wrote a post last week about “BPM as a platform” that every BPM vendor and customer should read, because it tells it like it is: the days of departmental workflow/BPM systems are past, and it’s time to grow up and think about this as part of your infrastructure. In his words:

Further, while it is a platform, it is built to handle and give visibility to processes of all sizes – from human workflows to complex integration and event processing. Choosing to start down the “process excellence” path may very well start with a simple process – therefore it’s not a “sledgehammer for a nail.” It’s a “properly sized hammer for the nail” built on a solid foundation that allows many people to be building (hammering) at once. And because of this, it scales very well from an administrative perspective. You can build one process, or you can build twenty. Sequentially, or all at once. Guess what? The maintenance of the platform is identical!

He also talks about how the real value of BPM isn’t process automation, it’s the data that the BPMS captures about the process along the way, which can then feed back into the process/performance improvement cycle and provide far more improvement than the original process automation.

He takes an unnecessary jab at Pegasystems (“the best BPM platforms aren’t some rules-engine based thing”) which probably indicates where Lombardi is getting hit from a competitive standpoint, and the writing’s a bit stilted, but that shows that it’s really coming from him, not being polished by a handler before it’s released. And the fact that the blog’s on Typepad rather than hosted on the Lombardi site is also interesting: it makes at least a token statement of independence on his part.

Worth checking out.