After the DST Advance conference in Phoenix two weeks ago, I headed north for a few days’ vacation at the Grand Canyon. Yes, there was snow, but it was lovely.

Back at work, I spent a day last week in Boston for the first-ever North American Software AG analyst event, attended by a collection of industry and financial analysts. It was a long-ish half day followed by lunch and opportunities for one-on-one meetings with executives: worth the short trip, especially considering that I managed to fly in and out between the snowstorms that have been plaguing Boston this year. I didn’t live-blog this since there was a lot of material spread over the day, so I had a chance to see some of the other analysts’ coverage published after the event, such as this summary from Peter Krensky of Aberdeen Group.

The focus of the event was squarely on the digital enterprise, a trend that I’m seeing at many other vendors but not so many customers yet. Software AG’s CEO, Karl-Heinz Streibich, kicked off the day talking about how everywhere you turn, you hear about the digital enterprise: not just using digital technology, but having enough real-time data and devices integrated into our work and lives that they can be said to be truly digital. Streibich feels that companies with a basis in integration middleware – like Software AG with webMethods and other products – are in a good position to enable digital enterprises by integrating data, devices and systems of all types.

Although Software AG is not a household consumer name, its software is in 70% of the Fortune 1000, with a community of over 2M developers; it’s fair to say that you will likely interact with a company that uses Software AG products at least once per day: banks, airports and airlines, manufacturing, telecommunications, energy and more. Their revenues are split fairly evenly between Europe and the Americas, with a small amount in Asia Pacific. License revenues are 32% of the total, with maintenance and consulting splitting the remainder; this relatively low proportion of license revenue is an indicator of a mature software company, and not unexpected from a company more than 40 years old. I found a different representation of their revenues more interesting: they had 66% of their business in the “digital business” segment in 2014, expected to climb to 75% this year, which includes their portfolio minus the legacy ADABAS/NATURAL mainframe development tools. Impressive, considering that it was about a 50:50 split in 2010.

Part of this increase is likely due to their several acquisitions over that period, but also because they are repositioning their portfolio as the Digital Business Platform, a necessary shift towards the systems of engagement where more of the customer spend is happening. Based on the marketecture diagram, this platform forms a cut-out layer between back office core operational systems and front office customer engagement systems. Middleware, by any other name; but according to Streibich, more business logic is moving to the middleware layer, although this is what middleware vendors have been telling us for decades.

There are definitely a lot of capable products in the portfolio that form this “development platform for digital business” – webMethods (integration and BPM), ARIS (BPA), Terracotta (in-memory big data), Longjump (application PaaS), Metaquark (mobility), Alfabet, Apama, JackBe and more – but the key will be to see how well they can make them all work together to be a true platform rather than just a collection of Software AG-branded tools.

We had an in-depth presentation on their Digital Business Platform from Wolfram Jost, Software AG’s CTO; you can read the long version on their site, so I’ll just hit the high points. He started with some industry quotes, such as “every company will become a software company”, and one analyst firm’s laughable brainstorm for 2014, “Big Change”, but moved on to define digital business as having the following characteristics:

- Blurring the digital and physical world

- More influence of customers (on business direction as well as external perceptions)

- Combining people, business and physical things

- Agility, speed, scale, responsiveness

- “Supermaneuverable” business processes

- Disrupting existing business models

The problem with this shift in business models is that conventional business applications don’t support the way that the new breed of business applications are designed, developed, used and operated. Current applications and development techniques are still valuable, but are being pushed behind the scenes as core operational systems and packaged applications.

Software AG’s Digital Business Platform, then, is based on the premise that few packaged applications are useful in the face of business transformation and the required agility. We need tools to create adaptive applications – built to change, not to last – especially in front office customer engagement applications, replacing or augmenting packaged CRM and other applications. This is not fundamentally different from the message about any agile/adaptive/mashup/model-driven application development environment over the past few years, including BPMS; it’s interesting to see how a large vendor such as Software AG positions their entire portfolio around that message. In fact, one of their slides refers to the adaptive application platform as iBPMS, since the definition of iBPMS has expanded to include everything related to model-driven application development.

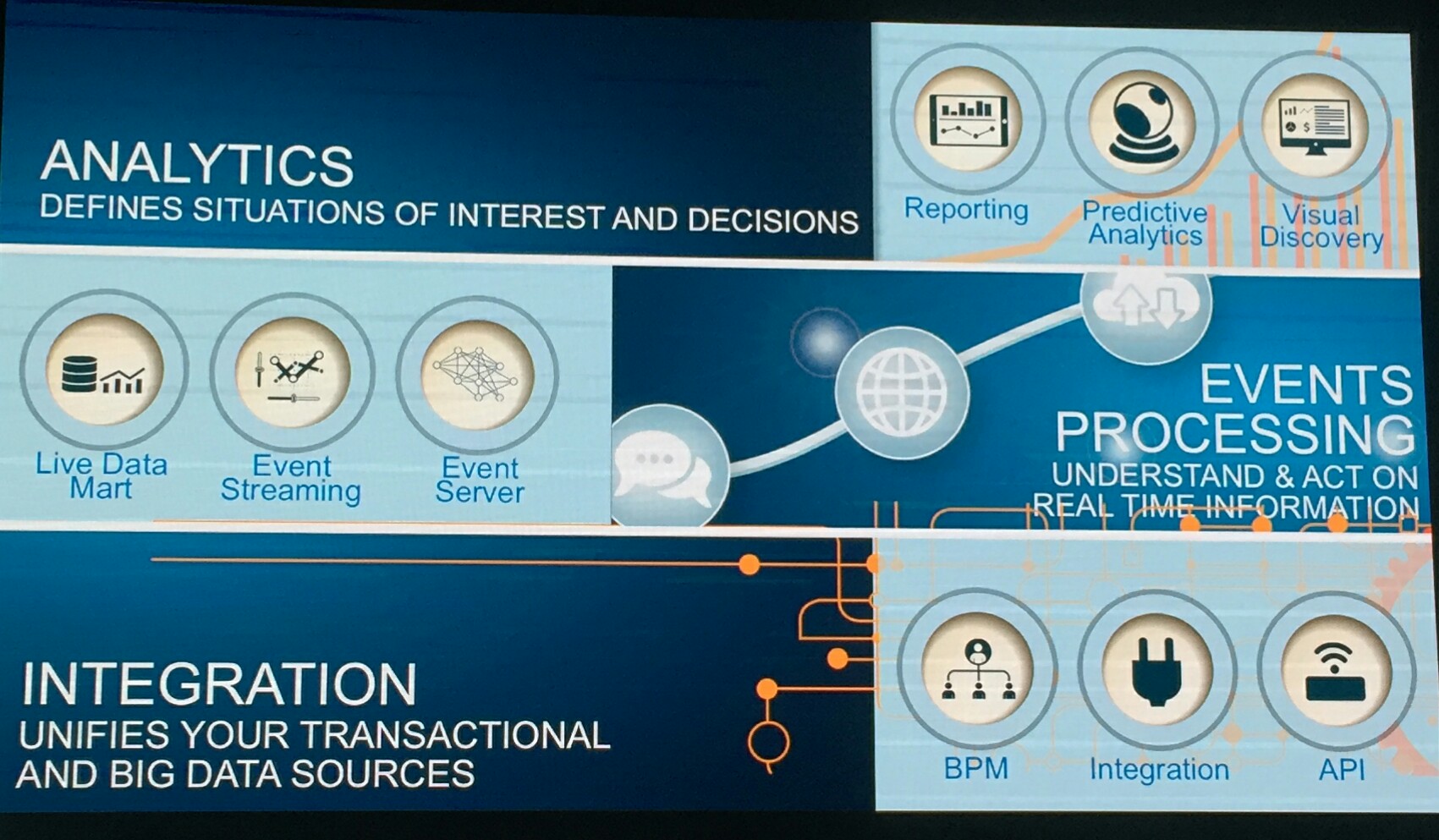

The core capabilities of their platform include intelligent business operations (webMethods Operational Intelligence, Apama Streaming Analytics); agile processes (webMethods BPM and AgileApps); integration (webMethods Integration and API Management); in-memory data fabric (Terracotta); and business and IT transformation (ARIS BPA and GRC, Alfabet IT Portfolio Management and EA Management). In a detailed slide overlaying their products, they also added a transaction processing capability to allow the inclusion of ADABAS-NATURAL, as well as the cloud offerings that they’ve released over the past year.


Jost dug further into definitions of business application layers and architectural requirements. They provide the structure and linkages for event routing and event persistence frameworks, using relatively loose event-based coupling between their own products to allow them to be deployed selectively, but also (I imagine) to reduce the amount of refactoring of the products that would be required for tighter coupling. Their cloud IoT offering plays an interesting role by ingesting events from smart devices – developed via co-innovation with device companies such as Bosch and Siemens – for integration with on-premise business applications.
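To make the idea of loose event-based coupling a bit more concrete, here is a minimal sketch of a publish/subscribe router with a simple event log. This is purely illustrative and not Software AG's API: the `EventBus` class, the topic names and the temperature threshold are all hypothetical, and a real platform would use a message broker and durable storage rather than in-process lists.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process event router with a simple persistence log."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)
        self.log: List[Event] = []  # stand-in for an event persistence store

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a component's handler for one topic."""
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Persist the event, route it, and return the number of handlers called."""
        event = {"topic": topic, "payload": payload}
        self.log.append(event)  # persist before routing
        handlers = self._handlers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
alerts: List[str] = []


def on_reading(event: Event) -> None:
    # Hypothetical analytics component: flag devices that run too hot.
    if event["payload"]["temperature"] > 90:
        alerts.append(event["payload"]["device"])


# Only the analytics component is "deployed" in this sketch.
bus.subscribe("device.reading", on_reading)

delivered = bus.publish("device.reading", {"device": "pump-7", "temperature": 95})
# Nothing subscribes to this topic, yet the publisher is unaffected.
undelivered = bus.publish("order.created", {"order_id": 1})
```

The publisher never references a subscriber directly, so a component can be left out of a deployment without changing the others; that is the selective-deployment benefit described above, at toy scale.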

We then heard two shorter presentations, each followed by a panel. First was Eric Duffaut, the Chief Customer Officer, presenting their go-to-market strategy then moderating a panel with two partners, Audi Lucas of Wipro and Chris Brinton of Mosaic Data Science. Their GTM plan was fairly standard for a large enterprise software vendor, although they are improving effectiveness by having a single marketing team across all products as well as improving the sales productivity processes. Their partners are critical for scalability in this plan, and provide the necessary industry experience and solutions; both of the partner panelists talked about co-innovation with Software AG, rather than just providing resources trained on the products.

The second presentation and panel was led by John Bates, CMO and head of industry solutions; he was joined by a customer panel including Bryan Zigler of Boeing, Mark DuBrock of Standard & Poor’s, and Greg James of Outerwall. Bates discussed the role of industry solutions and solution accelerators, built by Software AG and/or partners, that provide a pre-built, customizable and adaptive application for fast deployment. They’re not using the Smart Process Application terminology that other vendors adopted from the Forrester trend from a couple of years ago, but it’s a very similar concept, and Bates announced the solution marketplace that they are launching to allow these to be easily discovered and purchased by customers.

My issue with solution accelerators and industry solutions in general is that many of these solutions are tied to a specific version of the underlying technology, and are templates rather than frameworks in that you change the solution itself during implementation: upgrades to the platform may not be easily performed, and upgrades to the actual solution likely require re-customizing for each deployed instance. I didn’t get a chance to ask Bates how SAG helps partners and customers to create and deploy more upgradable solutions, e.g., recommended technology guardrails; this is a sticky problem that every technology vendor needs to deal with.

Bates also discussed the patterns of digital disruption that can be seen in the marketplace, and how these are manifesting in three specific areas that they can help to address with their Digital Business Platform:


- Connected customers, providing opportunities for location-based marketing and offers, automated concierge service, customer location tracking, demographic marketing

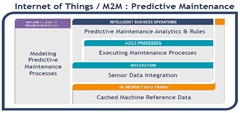

- Internet of Things/Machine-to-Machine (IoT/M2M), with real-time monitoring and diagnostics, and predictive maintenance

- Proactive risk and compliance, including proactive financial trade surveillance for unusual/rogue behavior

After a wrapup by Streibich, we received copies of his latest book, The Digital Enterprise, plus Thingalytics by Bates; ironically, these were paper rather than digital copies.

Disclosure: Software AG paid my airfare and hotel to attend this event, plus gave me a nice lunch and two books, but did not otherwise compensate me for my time nor for anything that I have written here.

This week, I’m in Las Vegas for Kofax Transform, although just as an attendee this year rather than a speaker; expect to see a few notes from here over the two days of the conference.

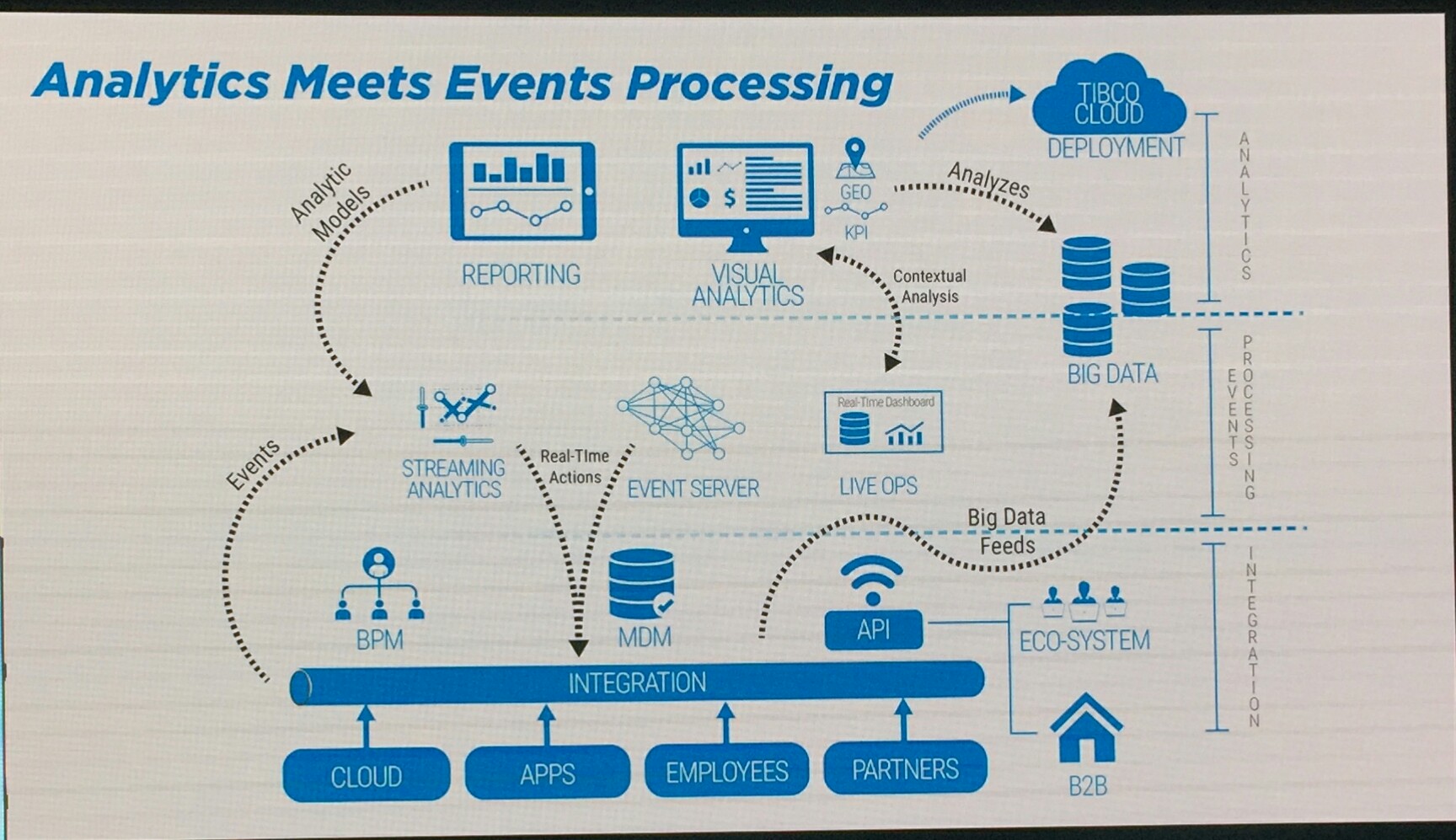

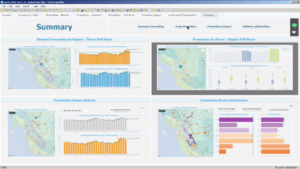

Brad Hopper, VP strategy for analytics, for a demo of Spotfire visual analytics while wearing a long blond wig (attempting to make a point about the importance of beauty, I think). He built an analytics dashboard while he talked, showing how easy it is to create visual analytics and trigger smart actions. He went on to talk about data preparation and cleansing, which can often take as much as 50% of an analyst’s time, and demonstrated importing a CSV file and using quick visualizations to expose and correct potential problems in the underlying data. As always, the Spotfire demos are very impressive; I don’t follow Spotfire closely enough to know what’s new, but it all looks pretty slick.

Michael O’Connell, TIBCO’s chief analytics officer, came up to demonstrate a set of analytics applications for a fictitious coffee company: sales figures and drilldowns, with what-if predictions for planning promotions; and supply chain management and smart routing of product deliveries.

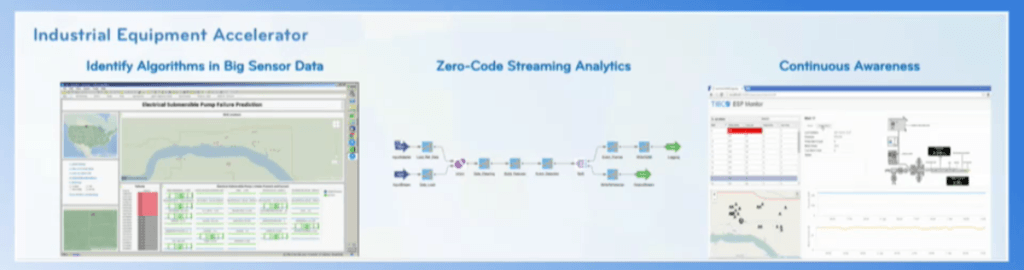

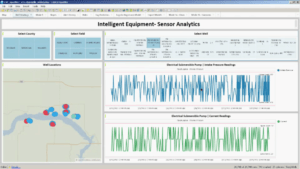

oil industry application that leverages sensor analytics to maximize equipment productivity by initiating preventative maintenance when the events emitted by the device indicate that failure may be imminent. He showed a more comprehensive interface that would be used in the head office for real-time monitoring and analysis, and a simpler tablet interface for field service personnel to receive information about wells requiring service. Palmer finished the analytics segment with a brief look at LiveView Web, a zero-code environment for building operational intelligence dashboards.

After the break, Adam Steltzner, NASA’s lead engineer on the Mars Rover and author of The Right Kind of Crazy: A True Story of Teamwork, Leadership, and High-Stakes Innovation, talked about innovation, collaboration and decision-making under pressure. Check out the replay of the keynote for his talk, a fascinating story of the team that built and landed the Mars landing vehicles, along with some practical tips for leaders to foster exploration and innovation in teams.
